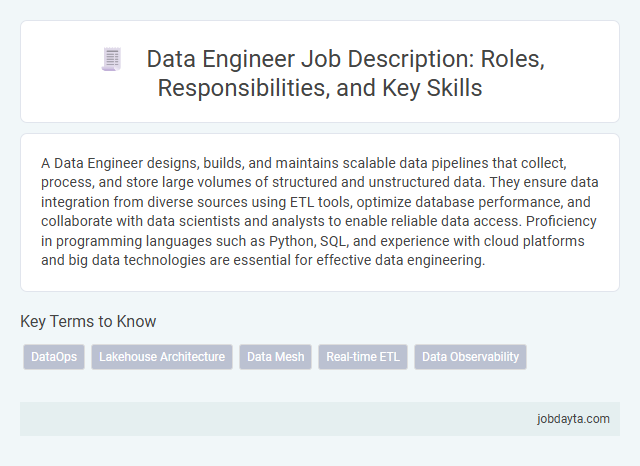

A Data Engineer designs, builds, and maintains scalable data pipelines that collect, process, and store large volumes of structured and unstructured data. They integrate data from diverse sources using ETL tools, optimize database performance, and collaborate with data scientists and analysts to provide reliable data access. Proficiency in languages such as Python and SQL, along with experience in cloud platforms and big data technologies, is essential for effective data engineering.

Overview of a Data Engineer Role

What are the core responsibilities of a Data Engineer? Data Engineers design, construct, and maintain scalable data pipelines that ensure seamless data flow across systems. Their work enables organizations to process, store, and analyze large volumes of data efficiently.

How does a Data Engineer contribute to data quality and reliability? By implementing robust ETL (Extract, Transform, Load) processes, Data Engineers cleanse and transform raw data into usable formats. They also monitor pipeline performance and troubleshoot data discrepancies to maintain data integrity.
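As a small illustration of the extract, transform, and load steps described above, here is a minimal sketch using only Python's standard library. The CSV layout, the `orders` table, and the function names `extract`, `transform`, and `load` are hypothetical; production pipelines typically rely on dedicated ETL and orchestration tools.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent casing, stray whitespace,
# a duplicate row, and a row with a missing amount.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
2,BOB,5.00
3,carol,
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cleanse and normalize rows, dropping duplicates and incomplete records."""
    seen = set()
    clean = []
    for row in rows:
        order_id = int(row["order_id"])
        amount = row["amount"].strip()
        if order_id in seen or not amount:
            continue  # skip duplicate IDs and rows missing an amount
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().title(), float(amount)))
    return clean

def load(rows, conn):
    """Load: write cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
    # -> [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Keeping each stage a separate function makes it easy to test the transform logic on its own and to swap the SQLite target for a real warehouse later.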

What technical skills are essential for a Data Engineer? Proficiency in programming languages like Python and Scala, as well as in SQL, is crucial. Expertise in big data technologies such as Hadoop and Spark, together with cloud platforms like AWS or Azure, supports advanced data processing tasks.

How does a Data Engineer support data analytics teams? Data Engineers build and optimize data architecture that facilitates real-time and batch data access. This infrastructure allows data scientists and analysts to generate insights and develop predictive models effectively.

Why is your role as a Data Engineer vital in modern enterprises? You bridge the gap between raw data and actionable intelligence by creating efficient data workflows. This foundation empowers business decisions driven by accurate and timely information.

Key Responsibilities of a Data Engineer

Data Engineers design, construct, and maintain scalable data pipelines to collect, process, and store large volumes of data. They ensure data quality and reliability to support analytics and business intelligence solutions.

They collaborate with data scientists and analysts to optimize data flow and integration from diverse sources. Data Engineers also implement data security measures and monitor pipeline performance for continuous improvement.

Essential Technical Skills for Data Engineers

Data Engineers play a crucial role in building and maintaining the infrastructure for data generation, storage, and processing. Mastery of specific technical skills is essential to ensure efficient data pipeline creation and management.

- Programming Proficiency - Expertise in languages such as Python, Java, or Scala is vital for developing data processing workflows and automation scripts.

- Database Management - Strong knowledge of SQL and NoSQL databases enables effective data storage, retrieval, and manipulation across diverse systems.

- Data Pipeline Orchestration - Familiarity with tools like Apache Airflow and Apache NiFi allows seamless scheduling, monitoring, and data flow management.
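Orchestrators such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and start each task only after its upstream dependencies have finished. The sketch below illustrates that idea with Python's standard-library `graphlib` module (Python 3.9+); it is not the Airflow API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DAG = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}

def run_task(name, log):
    """Placeholder task body; a real task would move or transform data."""
    log.append(name)

def run_pipeline(dag):
    """Run tasks in dependency order and return the execution log.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    log = []
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    while sorter.is_active():
        # get_ready() returns every task whose dependencies are done; an
        # orchestrator could run these in parallel. Here they run one at a
        # time, sorted so the order is deterministic.
        for task in sorted(sorter.get_ready()):
            run_task(task, log)
            sorter.done(task)
    return log

if __name__ == "__main__":
    print(run_pipeline(DAG))
    # -> ['extract_customers', 'extract_orders', 'transform', 'load_warehouse', 'refresh_dashboard']
```

Real schedulers add retries, timeouts, and monitoring around this core, but the dependency ordering works the same way.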

Common Tools and Technologies Used

Data Engineers rely on tools like Apache Hadoop and Spark for large-scale data processing and analytics. They utilize databases such as PostgreSQL, Cassandra, and MongoDB to manage diverse data storage needs. Cloud platforms including AWS, Google Cloud, and Azure provide scalable infrastructure and services essential for modern data engineering workflows.

Data Pipeline Development and Management

Data Engineers specialize in designing, building, and maintaining efficient data pipelines that enable seamless data flow from various sources to storage systems. These pipelines ensure data is accurately processed, transformed, and made accessible for analysis and business intelligence.

Pipeline development involves selecting appropriate tools and technologies to handle large-scale data ingestion, validation, and integration. Effective management of these pipelines helps keep your organization's data infrastructure reliable, scalable, and performant.

Collaboration with Data Scientists and Analysts

Data Engineers play a critical role in building and maintaining the infrastructure that supports data analytics. They collaborate closely with Data Scientists and Analysts to ensure data quality and accessibility.

By designing robust data pipelines, Data Engineers enable Data Scientists to focus on creating predictive models and deriving insights. They work together to identify data requirements and optimize data storage solutions. This collaboration drives efficient decision-making processes and accelerates innovation within organizations.

Data Warehousing and ETL Processes

Data Engineers specialize in designing and managing data warehousing solutions that support efficient storage and retrieval of massive datasets. Expertise in ETL processes is essential to transform and load data accurately across various platforms.

- Data Warehousing Architecture - Design scalable and optimized data warehouses to ensure high performance and data integrity.

- ETL Pipeline Development - Build robust ETL workflows to automate data extraction, transformation, and loading from multiple sources.

- Data Quality Assurance - Implement validation and cleansing techniques to maintain reliable and consistent data for analytics.
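Validation is often expressed as a set of named rules applied to each record, with failing records quarantined rather than loaded. A minimal sketch, assuming records arrive as Python dictionaries; the field names and rules are hypothetical, and dedicated data-quality frameworks usually fill this role in practice.

```python
from datetime import date

# Hypothetical validation rules: each maps a rule name to a predicate over one record.
RULES = {
    "customer_id_present": lambda r: r.get("customer_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "order_date_not_future": lambda r: isinstance(r.get("order_date"), date)
    and r["order_date"] <= date.today(),
}

def validate(records, rules=RULES):
    """Split records into valid ones and quarantined ones, with the names of the rules they failed."""
    valid, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            quarantined.append((record, failed))
        else:
            valid.append(record)
    return valid, quarantined

if __name__ == "__main__":
    batch = [
        {"customer_id": 1, "amount": 25.0, "order_date": date(2024, 1, 5)},
        {"customer_id": None, "amount": -3, "order_date": date(2024, 1, 6)},
    ]
    valid, quarantined = validate(batch)
    print(len(valid), quarantined[0][1])
    # -> 1 ['customer_id_present', 'amount_non_negative']
```

Recording which rule failed, instead of silently dropping rows, makes it easier to trace quality problems back to their source.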

Your role as a Data Engineer ensures seamless integration of complex data ecosystems to empower data-driven decision making.

Importance of Data Quality and Governance

Data quality and governance are critical pillars in engineering robust data systems. Ensuring accuracy, consistency, and compliance in data sets empowers Data Engineers to build reliable analytics and decision-making tools.

- Data Accuracy - Maintaining precise and error-free data enhances trust and usability across all engineering projects.

- Regulatory Compliance - Effective governance aligns data handling with industry standards, reducing legal and operational risks.

- Data Accessibility - Proper governance frameworks facilitate secure and efficient access to data resources for your engineering teams.

Required Educational Background and Certifications

| Aspect | Details |
|---|---|
| Required Educational Background | Bachelor's degree in Computer Science, Software Engineering, Information Technology, or related fields. Advanced degrees such as a Master's in Data Engineering, Big Data, or Analytics provide a competitive advantage. Courses in database management, data warehousing, cloud computing, and programming languages like Python, Java, or Scala are essential for foundational knowledge. |
| Certifications | Industry certifications enhance expertise and credibility. Key certifications include Google Cloud Professional Data Engineer, Microsoft Certified: Azure Data Engineer Associate, AWS Certified Data Analytics - Specialty, and Cloudera Certified Data Engineer. Certifications in big data technologies such as Apache Hadoop, Apache Spark, and Kafka also add value to your professional profile. |

Career Growth and Opportunities in Data Engineering

Data Engineering is a rapidly evolving field essential for managing and optimizing big data infrastructure. Career growth in data engineering offers opportunities to work with cutting-edge technologies like Apache Spark, Kafka, and cloud platforms such as AWS and Azure. Your expertise in building scalable data pipelines and ensuring data quality positions you for roles in diverse industries including finance, healthcare, and technology.

Related Important Terms

DataOps

Data Engineers specializing in DataOps streamline data pipeline automation, ensuring continuous integration and delivery of high-quality, scalable datasets. They leverage tools like Apache Airflow, Kubernetes, and Jenkins to enhance collaboration between development and operations teams, optimizing data workflows and reducing deployment errors.

Lakehouse Architecture

Data Engineers specializing in Lakehouse Architecture design and manage unified data platforms that combine the reliability and structure of data warehouses with the flexibility and scalability of data lakes. They optimize data ingestion, storage, and processing pipelines to enable seamless analytics and machine learning workflows on large-scale, diverse datasets.

Data Mesh

Data Engineers play a crucial role in implementing Data Mesh architecture by designing decentralized data pipelines that enable domain-oriented data ownership and self-serve data infrastructure. They ensure scalable, reliable data integration across distributed teams, facilitating data discoverability and governance within complex organizational ecosystems.

Real-time ETL

Data Engineers specializing in Real-time ETL design and implement robust pipelines that process streaming data with minimal latency, ensuring continuous data integration from diverse sources such as IoT devices, social media, and transactional databases. They leverage technologies like Apache Kafka, Apache Flink, and Spark Streaming to optimize data ingestion, transformation, and delivery in scalable cloud environments, enabling real-time analytics and decision-making.
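Streaming engines such as Flink and Spark Streaming commonly aggregate events over fixed time windows as they arrive. The sketch below shows a tumbling-window count over an in-memory event stream using a Python generator. It assumes events arrive in timestamp order, ignores late data, and stands in for rather than reproduces any Kafka, Flink, or Spark API; the `(timestamp, event type)` event shape is hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, event type); hypothetical shape

def tumbling_window_counts(
    events: Iterable[Event], window_seconds: float
) -> Iterator[Tuple[float, dict]]:
    """Yield (window_start, counts per event type) each time a fixed-size window closes.

    Assumes events arrive in non-decreasing timestamp order; late events are not handled.
    """
    current_start = None
    counts = Counter()
    for ts, kind in events:
        window_start = (ts // window_seconds) * window_seconds
        if current_start is not None and window_start != current_start:
            yield current_start, dict(counts)  # window closed: emit its result downstream
            counts = Counter()
        current_start = window_start
        counts[kind] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final, possibly partial window

if __name__ == "__main__":
    stream = [(0.5, "click"), (3.2, "view"), (4.9, "click"), (5.1, "click"), (12.0, "view")]
    for start, result in tumbling_window_counts(stream, window_seconds=5):
        print(start, result)
    # -> 0.0 {'click': 2, 'view': 1}
    # -> 5.0 {'click': 1}
    # -> 10.0 {'view': 1}
```

Because the generator emits each window as soon as it closes, downstream consumers see results with low latency instead of waiting for a full batch.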

Data Observability

Data Engineers use data observability tools to monitor pipeline health, ensuring data accuracy and reliability and detecting anomalies promptly. Tracking metrics such as data freshness, volume, and schema changes lets teams catch issues before they reach downstream users and helps maintain the integrity of the data infrastructure.
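The three metrics named above can be sketched as simple checks. This is a minimal illustration under stated assumptions: the thresholds, the column-to-type schema dictionaries, and the function names are hypothetical, and observability platforms typically learn baselines automatically rather than using fixed tolerances.

```python
from datetime import datetime, timedelta, timezone

def check_freshness(last_loaded_at, max_age, now=None):
    """Freshness: the table should have been updated within max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_loaded_at <= max_age

def check_volume(row_count, baseline, tolerance=0.5):
    """Volume: row count should be within +/- tolerance (a fraction) of a baseline."""
    return abs(row_count - baseline) <= tolerance * baseline

def detect_schema_changes(expected, observed):
    """Schema: report columns that were added, removed, or changed type."""
    added = sorted(observed.keys() - expected.keys())
    removed = sorted(expected.keys() - observed.keys())
    retyped = sorted(c for c in expected.keys() & observed.keys() if expected[c] != observed[c])
    return {"added": added, "removed": removed, "retyped": retyped}

if __name__ == "__main__":
    now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
    # Last load 30 hours ago against a 24-hour limit: stale.
    print(check_freshness(now - timedelta(hours=30), timedelta(hours=24), now=now))  # -> False
    # Volume fell 60% below baseline, outside the 50% tolerance.
    print(check_volume(row_count=4_000, baseline=10_000))  # -> False
    print(detect_schema_changes(
        {"id": "INTEGER", "amount": "REAL"},
        {"id": "INTEGER", "amount": "TEXT", "currency": "TEXT"},
    ))
    # -> {'added': ['currency'], 'removed': [], 'retyped': ['amount']}
```

Running checks like these after every load, and alerting when one fails, is what turns them from ad hoc debugging into proactive monitoring.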

Data Engineer Infographic

jobdayta.com

jobdayta.com